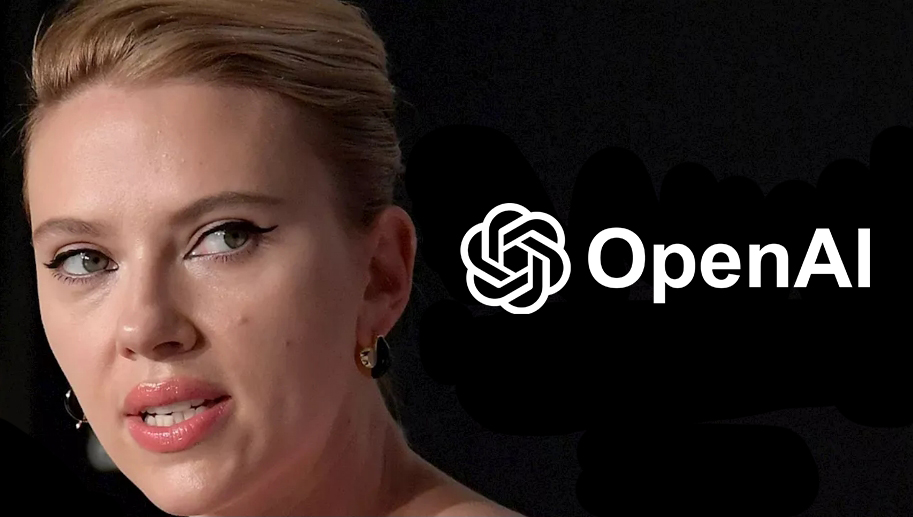

In a plot twist that feels ripped straight out of a dystopian movie, OpenAI is under fire for allegedly cloning Scarlett Johansson’s voice, or at least using one that sounds eerily close to it.

This controversy spiraled out of the release of their latest voice technology, specifically a voice called Sky, which has stirred up quite the conversation since its demo.

If you’ve seen the movie “Her” (or even if you haven’t), you likely know that Scarlett Johansson’s sultry voice as the AI assistant Samantha was central to its charm.

The uncanny similarity between Johansson’s voice in the film and OpenAI’s new Sky voice has raised some eyebrows.

OpenAI showed off the capabilities of their new GPT-4o model, including a voice mode capable of remarkably human-like conversation. People quickly noticed that the Sky voice bore a striking resemblance to Johansson’s portrayal of the AI Samantha in “Her”.

Naturally, speculation ran wild, suggesting that OpenAI had mimicked her voice without permission.

Johansson didn’t waste time clarifying the matter; she confirmed not only that OpenAI had approached her for the project last September, but also that she declined.

The resemblance caused quite a stir. Johansson was, understandably, none too pleased, especially when friends and news outlets mistook Sky’s voice for hers.

Things got stickier when it came to light that Sam Altman, CEO of OpenAI, had tweeted the single cryptic word “her” around the time of the GPT-4o demo, a clear nudge toward the film.

It didn’t help that Altman had contacted her agent just days before the release, asking her to reconsider. The public had no trouble connecting the dots.

OpenAI quickly went into damage control mode. They issued a statement asserting that Sky’s voice belonged to a different professional actress, one of five voice actors selected from roughly 400 submissions, and was not an intentional imitation of Johansson.

Yet this didn’t calm the waters. The court of public opinion seemed set against them, as the similarity felt too substantial to be coincidental.

Things took a legal turn. Johansson’s lawyers demanded that OpenAI detail exactly how Sky’s voice was created. The pressure was enough to make OpenAI, in Johansson’s words, “reluctantly” take down the Sky voice, and Altman issued a public apology admitting they could have communicated better.

This incident couldn’t have come at a more turbulent time for the AI industry. Concerns about deepfakes, identity theft, and the ethical use of AI technologies are already at high tide, and situations like these bring them crashing into public consciousness.

Voice cloning, while an astonishing technological feat, treads into ethically murky waters—waters where laws haven’t quite caught up yet.

The incident with Johansson may very well become a landmark moment, pushing forward the call for clearer and stricter regulations around the use of AI to replicate individuals’ voices and likenesses.

AI’s rapid advancements demand just as rapid an evolution in public understanding and legislative action. Until then, incidents like this serve as important case studies that reveal both the potential and the pitfalls of AI.

As Johansson herself put it, at a time when everyone is grappling with deepfakes and the protection of their own likeness and identity, these questions deserve absolute clarity.

So, while OpenAI’s intentions might have been to showcase groundbreaking technology, it turns out they’ve also spotlighted the urgent need for ethical considerations and regulatory oversight in the AI landscape.

And as AI continues to evolve, you can bet that celebrities and average folks alike will be paying closer attention to what their digital doppelgängers are saying.

OpenAI’s run-in with Scarlett Johansson over Sky’s voice throws a sharp light on the thin and often blurry ethical lines in AI development.

It’s a challenging road ahead, balancing the magic of innovation with respect for personal integrity and consent.