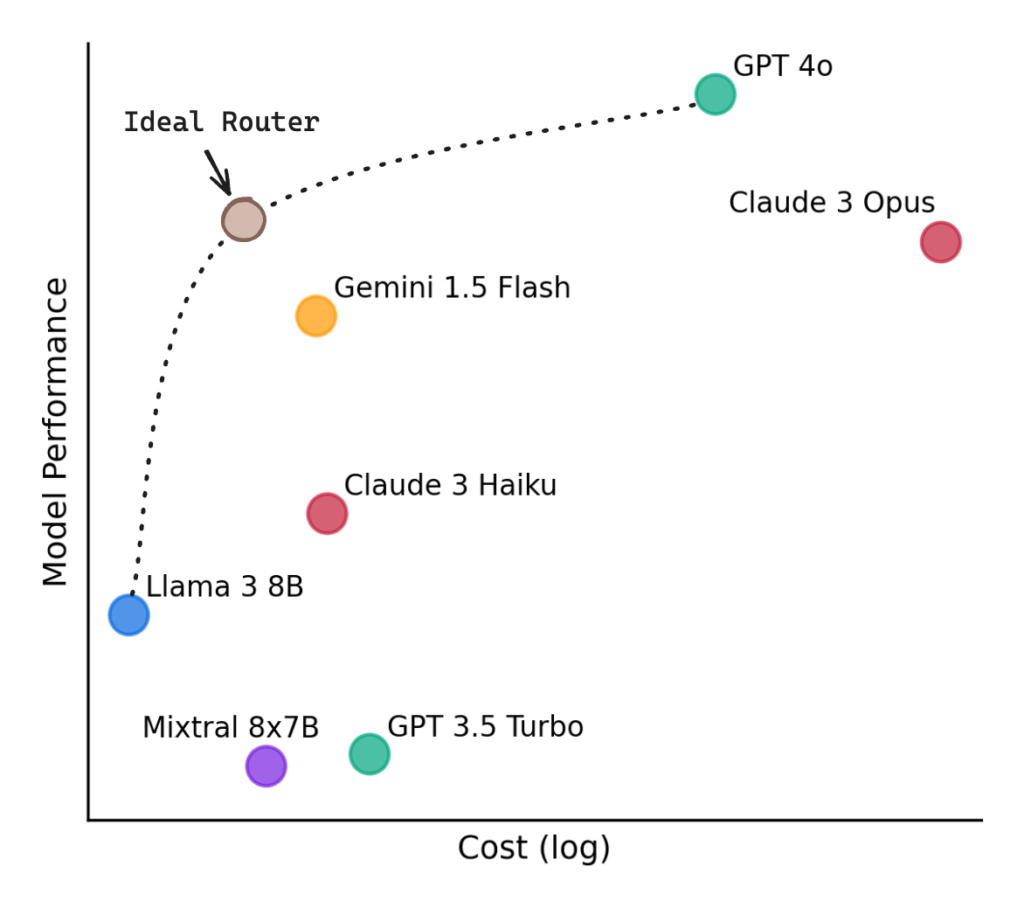

Researchers from UC Berkeley and Anyscale have introduced RouteLLM, an open-source framework designed to optimize the routing of large language model (LLM) queries, balancing cost and performance effectively.

RouteLLM addresses the challenge of efficiently routing queries to the most appropriate LLM, whether a stronger, more expensive model like GPT-4 or a weaker, cost-effective model like Mixtral-8x7B.

The framework's router assigns each query a score from 1 to 5 indicating how well Mixtral-8x7B is expected to handle it, and sends the query to GPT-4 only when the weaker model is unlikely to give an adequate answer.
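The decision step can be pictured as a simple threshold on that score. The following is a minimal sketch, not RouteLLM's actual API: the `route` function, the model-name constants, and the default threshold of 4.0 are all assumptions made for illustration.

```python
# Hypothetical model identifiers, used only for this illustration.
STRONG_MODEL = "gpt-4"
WEAK_MODEL = "mixtral-8x7b"


def route(score: float, threshold: float = 4.0) -> str:
    """Pick a model from a 1-5 suitability score for the weaker model.

    A higher score means the weaker model is expected to answer well.
    The threshold is an assumed tuning knob: raising it sends more
    queries to the strong model, lowering it saves more cost.
    """
    if not 1.0 <= score <= 5.0:
        raise ValueError(f"score must be in [1, 5], got {score}")
    return WEAK_MODEL if score >= threshold else STRONG_MODEL


if __name__ == "__main__":
    for s in (1.5, 3.9, 4.0, 5.0):
        print(s, "->", route(s))
```

In a setup like this, the threshold is what trades cost against quality, so it would normally be calibrated on held-out queries rather than fixed by hand.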

The framework’s generalizability was demonstrated by routing between different model pairs, such as Claude 3 Opus and Llama 3 8B, without retraining, indicating its robustness across various models.

RouteLLM’s performance was evaluated on benchmarks like MT Bench, MMLU, and GSM8K, showing significant cost reductions while maintaining high response quality. For instance, on MT Bench the matrix factorization router achieved 95% of GPT-4’s performance while sending only 26% of queries to GPT-4, making it roughly 48% cheaper than a random-routing baseline.
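To see how the share of strong-model calls translates into spend, here is a small worked calculation. The per-query prices are hypothetical placeholders, and the sketch does not try to reproduce the reported 48% figure, which depends on the pricing and baseline used in the evaluation.

```python
def blended_cost(strong_fraction: float, strong_cost: float, weak_cost: float) -> float:
    """Average cost per query when a fraction of queries goes to the strong model."""
    if not 0.0 <= strong_fraction <= 1.0:
        raise ValueError("strong_fraction must be between 0 and 1")
    return strong_fraction * strong_cost + (1.0 - strong_fraction) * weak_cost


def savings_vs_strong_only(strong_fraction: float, strong_cost: float, weak_cost: float) -> float:
    """Relative saving compared with sending every query to the strong model."""
    return 1.0 - blended_cost(strong_fraction, strong_cost, weak_cost) / strong_cost


if __name__ == "__main__":
    # Hypothetical prices: the weak model costs 4% of the strong model per query.
    strong, weak = 1.00, 0.04
    cost = blended_cost(0.26, strong, weak)
    print(f"blended cost per query: {cost:.4f}")
    print(f"saving vs strong-only: {savings_vs_strong_only(0.26, strong, weak):.1%}")
```

The saving you get from the same 26% call rate changes with the price gap between the two models and with the baseline you compare against, which is why headline percentages should be read alongside their reference point.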

The training process for RouteLLM leverages preference data: pairwise comparisons of response quality between models, which reveal where each model is strong and where it is weak. From these comparisons the router learns to predict, for a new query, whether the stronger model is likely to be needed.
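As a rough illustration of learning from preference data, the sketch below fits a tiny logistic model that predicts whether the strong model's answer would be preferred. It is a toy under stated assumptions, not one of RouteLLM's routers: the single "difficulty" feature, the four training examples, and the function names are all invented for the example.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_strong_win(weights: list[float], features: list[float]) -> float:
    """Probability that the strong model's response is preferred."""
    z = weights[0] + sum(w * x for w, x in zip(weights[1:], features))
    return sigmoid(z)


def train_win_predictor(examples, epochs: int = 500, lr: float = 0.1) -> list[float]:
    """Fit logistic regression with plain stochastic gradient ascent.

    examples: list of (features, strong_won) pairs, where strong_won is
    1 if the strong model's answer was preferred and 0 otherwise.
    Returns [bias, w_1, ..., w_n].
    """
    n_features = len(examples[0][0])
    weights = [0.0] * (n_features + 1)
    for _ in range(epochs):
        for features, strong_won in examples:
            error = strong_won - predict_strong_win(weights, features)
            weights[0] += lr * error
            for i, x in enumerate(features):
                weights[i + 1] += lr * error * x
    return weights


if __name__ == "__main__":
    # Invented preference data: one hypothetical "difficulty" feature per query.
    data = [([0.1], 0), ([0.2], 0), ([0.8], 1), ([0.9], 1)]
    w = train_win_predictor(data)
    print("easy query:", round(predict_strong_win(w, [0.1]), 3))
    print("hard query:", round(predict_strong_win(w, [0.9]), 3))
```

A router built this way would send a query to the strong model whenever the predicted win probability exceeds a chosen cutoff. Real routers would replace the hand-made feature with a learned representation of the query.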

RouteLLM offers a scalable way to deploy LLMs at lower cost without giving up much response quality. Its open-source release, along with its datasets and code, gives organizations a practical tool for optimizing their use of LLMs.