The ethical use of AI training data is under intense scrutiny as issues of bias, data protection, and legal disputes come to the forefront. Recent lawsuits and industry reports highlight the need for robust ethical frameworks and regulatory measures to ensure fairness and transparency in AI development.

A key issue is the presence of bias in AI models, which can arise from unrepresentative or poorly curated datasets. This bias can lead to unfair outcomes and discrimination against certain groups.
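One simple way to spot an unrepresentative dataset is to compare each group's share of the data against a reference distribution. The sketch below is illustrative only: the function name `representation_gaps`, the 5-point `tolerance`, and the toy group labels and reference shares are assumptions made for this example, not values drawn from any report or lawsuit discussed here.

```python
from collections import Counter


def representation_gaps(samples, reference_shares, tolerance=0.05):
    """Return groups whose observed share differs from the reference share
    by more than `tolerance` (positive = over-represented)."""
    counts = Counter(samples)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        # Share of the dataset that belongs to this group (0 if absent).
        observed = counts.get(group, 0) / total if total else 0.0
        if abs(observed - expected) > tolerance:
            gaps[group] = round(observed - expected, 3)
    return gaps


# Hypothetical toy data: one group label per training example.
labels = ["a"] * 80 + ["b"] * 15 + ["c"] * 5
# Hypothetical reference shares, e.g. taken from a target population.
reference = {"a": 0.5, "b": 0.3, "c": 0.2}

print(representation_gaps(labels, reference))
```

A check like this only flags imbalance in group counts. It does not detect biased labels or skewed content within a group, so it is a starting point for curation, not a complete audit.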

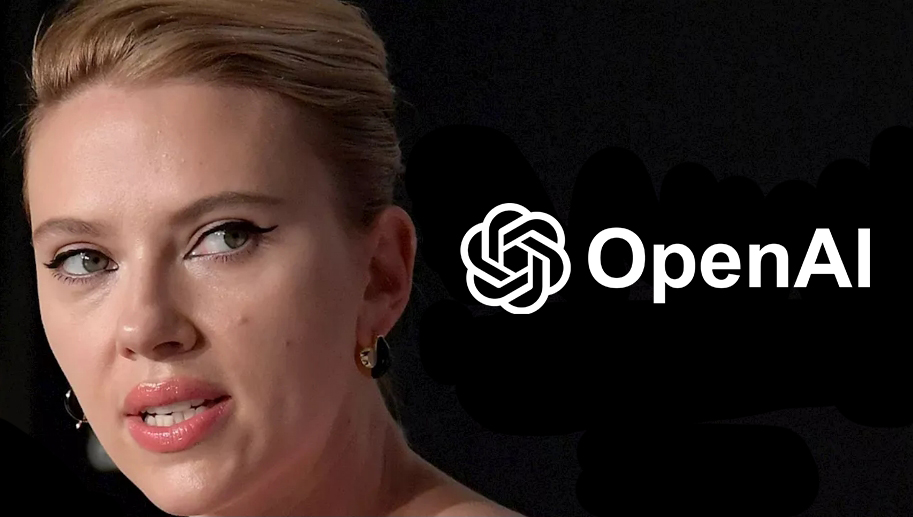

Legal challenges are also mounting, with high-profile lawsuits against major tech companies such as Meta and OpenAI. These cases often center on the allegedly unlawful use of copyrighted works in AI training datasets, raising questions about intellectual property rights and the ethical use of data.

To address these issues, companies are urged to adopt comprehensive ethical frameworks. Recommendations include creating tailored risk frameworks, optimizing guidance for product managers, and building organizational awareness around AI ethics. IBM, for instance, has established an AI Ethics Board to oversee the responsible development and deployment of AI technologies.

The debate over AI ethics extends to the treatment of data annotators, who play a crucial role in preparing training datasets. Reports indicate that fair compensation and proper training for annotators are essential to minimize bias and ensure high-quality data.

Synthetic data generation is being explored as a way to create diverse and representative datasets while avoiding some of the ethical pitfalls of using real-world data.

As AI continues to evolve, the ethical and legal landscape surrounding training data will likely become more complex. Future developments may include stricter regulations and more sophisticated ethical guidelines to balance innovation with the protection of individual rights and societal values.