Leading artificial intelligence experts, including two “godfathers of AI,” have issued a stark warning that the world is ill-equipped to handle imminent breakthroughs in AI technology.

The group of 25 experts, which includes Turing Award winners Geoffrey Hinton and Yoshua Bengio, asserts that governments have fallen short in establishing adequate regulatory frameworks to manage the potential risks associated with rapidly advancing AI systems.

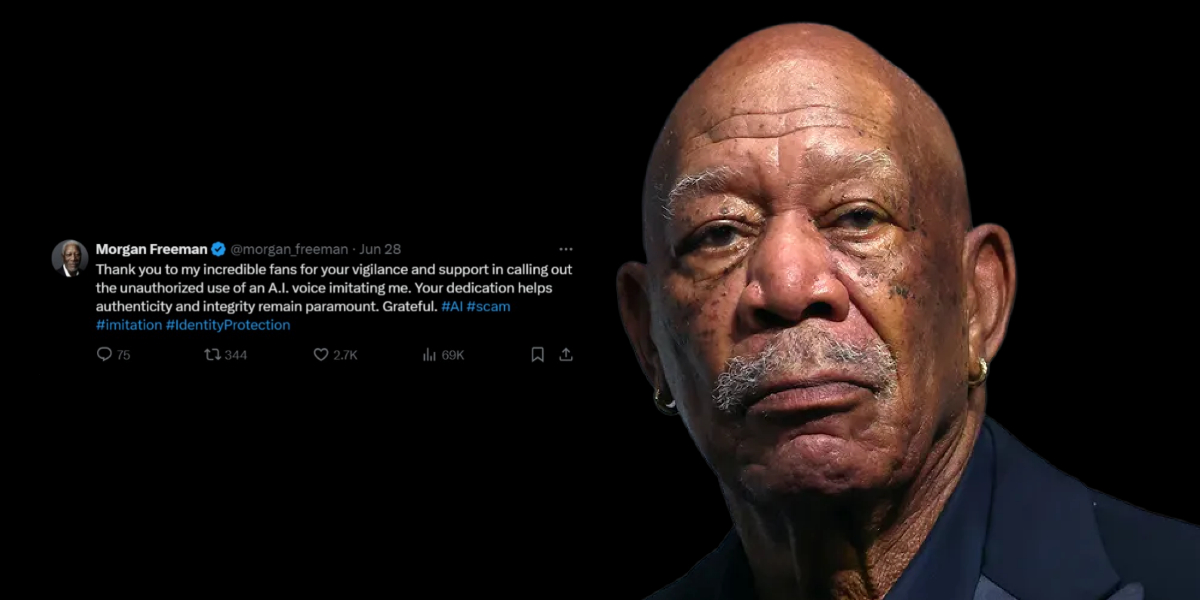

In a paper published in the journal Science, the experts highlight the urgent need for more robust safety measures and governance structures. They argue that the shift towards autonomous AI systems by tech companies could dramatically amplify the technology’s impact on society, potentially leading to large-scale social disruptions, malicious applications, and even an irreversible loss of human control over AI systems.

The experts propose several key recommendations to address these concerns. They call for the implementation of government safety frameworks that can adapt to rapid technological advancements, increased funding for newly established AI safety institutes, and more stringent risk-assessment requirements for tech firms. Additionally, they advocate for restrictions on the use of autonomous AI systems in critical societal roles.

This warning comes at a crucial time, as politicians, experts, and tech executives prepare to gather for a two-day summit in Seoul. The experts emphasize that while there have been some promising initial steps in AI governance, such as the voluntary testing agreement brokered at the Bletchley Park summit and the EU’s AI Act, these measures are insufficient to address the full scope of potential risks.

The paper also highlights the double-edged nature of advanced AI systems. While they have the potential to cure diseases and improve living standards, they also pose significant threats to social stability and could enable automated warfare.

The experts express particular concern about the tech industry’s move towards developing generalist AI systems capable of autonomous action and goal pursuit.

Recent developments in the field, such as OpenAI’s GPT-4o and Google’s Project Astra, demonstrate the rapid progress being made towards more autonomous and capable AI systems. These advancements underscore the urgency of the experts’ call for more comprehensive regulatory measures and safety protocols.

As the world grapples with the implications of these technological leaps, the message from these leading AI experts is clear: without swift and decisive action to improve governance and safety measures, we risk being overwhelmed by the transformative power of artificial intelligence.

The upcoming Seoul summit presents a critical opportunity for world leaders and tech companies to address these concerns and work towards ensuring that AI development proceeds in a manner that is both innovative and responsible.