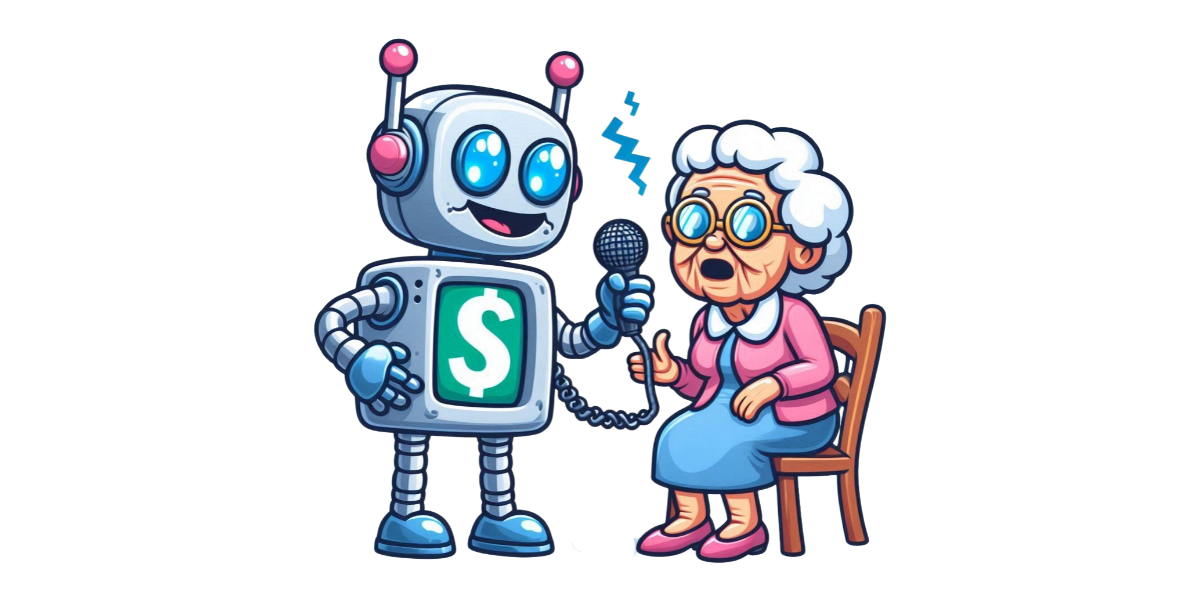

AI-enabled voice fraud is emerging as a significant threat, exploiting advanced voice cloning technologies to deceive victims.

Recent incidents in Canada and the United States highlight the growing sophistication and impact of these scams, which have resulted in substantial financial losses.

AI voice cloning technology has advanced to the point where scammers can convincingly mimic the voices of loved ones, leading to a surge in fraudulent activities. In Canada, criminals used AI to replicate the voices of family members, scamming senior citizens out of more than $200,000.

A mother in Arizona also received a distressing call from what she believed was her daughter, only to discover it was a scammer using AI-generated voice cloning.

The Federal Trade Commission (FTC) has recognized the severity of this issue, launching initiatives such as the Voice Cloning Challenge to address the harms posed by AI-enabled voice cloning technologies [4].

The FTC advises people to verify a caller's identity by hanging up and calling the person back at a phone number they know is genuine, especially if the caller asks for money via wire transfer, cryptocurrency, or gift cards.

The FBI has also warned about the growing threat of AI-powered phishing and voice and video cloning, urging the public to stay vigilant and adopt security measures to reduce the risk.

Experts suggest using code words or phrases known only to family members to verify the authenticity of calls.

As AI voice cloning technology continues to evolve, the potential for misuse in fraudulent activities grows.

Regulatory bodies and law enforcement agencies are actively working to combat these threats, but individuals must remain vigilant and adopt protective measures to safeguard against voice fraud.

Future developments in AI regulation and security protocols will be crucial in addressing this emerging challenge.